I’m looking for more best development practices ideas, future direction information and some bits on existing subsystems so I expect to spend a chunk of today watching sessions from 2018 ‘Unite’ conferences and related video segments. I’ll likely add links and comments here as I run through them…

Yesterday

Yesterday I watched a bunch of videos roaming around the Unity event system (a bit disappointing), possible enhanced event systems (pretty interesting), futures (ECS and the job system) and some singleton/scriptable object bits. I ended with a Unite session that also touched on some best practices areas where I want to see more.

Unite Austin 2017 – Game Architecture with Scriptable Objects – A talk by game developer Ryan Hipple from Schell Games. It covered quite a bit of best practices material with extensive suggestions for ways to leverage tools in Unity to provide developer accessible dependency injection in game internals. This talk got me started thinking that there might be a decent amount of best practices information available out there.

Unity3D Mistakes I wish I’d known to avoid – This talk covers a number of built-in facilities that provide capabilities without any scripting, yet which the presenter has seen many people reimplement in script. Understanding the tools already available in Unity is clearly worthwhile, and likely a bit challenging, as this is a moving target.

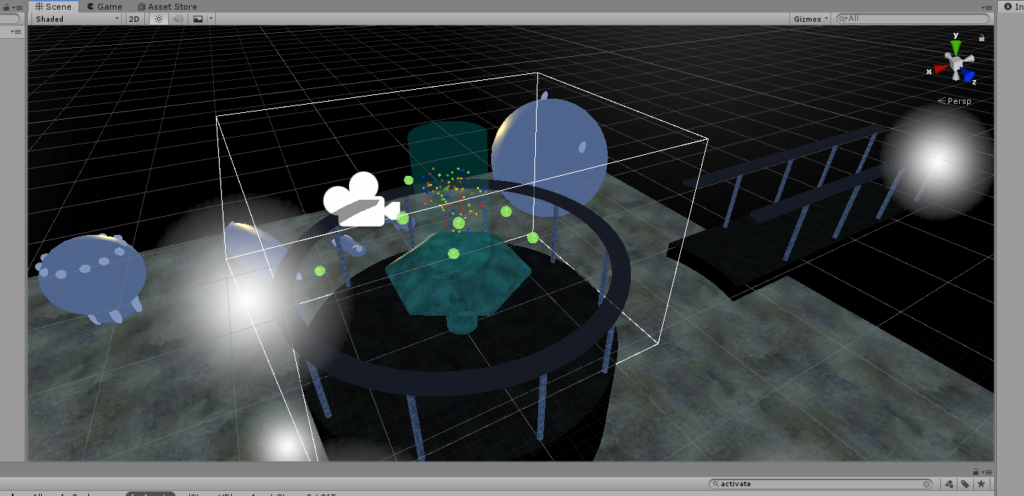

Unity ECS Basics – Getting Started – With 100,000 Tacos – A quick demonstration of the entity component system. This does seem like a nice new facility to accelerate things in Unity games. At this point (not sure about the 2019 beta) it seems to lack physics and some other functionality that is presumably in the works. This and the job system appear to be the next frontier for Unity game development.

Today

I expect to poke into scriptable objects, perhaps a little ECS and job system stuff, as much best practices advice as I can find (likely a bunch of Unite sessions), some serialization (particularly saved game issues where the saved game can be sent to a web server) and web remoting if I get to that.

Introduction to Scriptable Objects – Looking at the Unity-provided introduction to scriptable objects. These seem to be essentially game objects without transforms: data wrappers that can hold game-related information without pulling in the rest of the overhead of a full game object.

Interesting serialization blogs here, here and here.

Serialization in-depth with Tim Cooper: a Unite Nordic 2013 presentation – Hopped over here from the above presentation…older but still interesting. Shows some of the attribute controls that make finer-grained serialization possible.

I suspect that much of this material has evolved since 2013 but I’m looking at this as a baseline and for some visibility into the back-ends of things. This talk provides lots of detail about the advantages of using scriptable objects for stored data in a Unity project. They serialize far more reliably than game objects, and in many cases editor-to-game transitions won’t properly save and restore game objects.

The presenter also discusses custom editor UI implementation. Look at CustomPropertyDrawer. Need to look into assets and sub-assets, [Serializable] and asset trees. AssetDatabase.AddObjectToAsset(…). Need to remember HideFlags. Interesting items: HideFlags.HideAndDontSave, Selection.activeGameObject.

Best Practices for fast game design in Unity – Unite LA – More best practices. This one is oriented towards fast prototypes of mobile games so I’m suspecting that it won’t apply well to my personal use-cases.

Mentioned AssetForge as a way to build models. Potentially interesting. Houdini was mentioned, as were Jenkins builds for Unity games. Other tools named: Cinemachine, Odin, Amplify Shader, Effectcore.

Unite 2016 – Overthrowing the MonoBehaviour Tyranny in a Glorious Scriptable Object Revolution – Talk by the head of sustaining engineering at Unity. A detailed look at ScriptableObject. I feel as if I’m definitely getting closer to understanding how things hang together…not there yet, but closer.

Scriptable objects don’t get most callbacks. They are the same type as MonoBehaviour in the core system but cannot have a GameObject or Transform attached. Scriptable objects don’t have to get reset during play mode transitions. Callbacks noted: OnEnable, OnDisable and OnDestroy only. Creation goes through ScriptableObject.CreateInstance<>(), then AssetDatabase.CreateAsset() and AssetDatabase.AddObjectToAsset() to save to disk. There is also the [CreateAssetMenu] attribute.
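To make those notes concrete for myself, here is a minimal sketch of how the pieces might fit together. The class name `EnemyStats`, its fields, the menu paths and the asset path are all made up for illustration and are not from the talk. It assumes the file is saved as `EnemyStats.cs` so that Unity can match the script to the asset.

```csharp
using UnityEngine;
#if UNITY_EDITOR
using UnityEditor;
#endif

// Hypothetical data container; the name and fields are illustrative only.
// [CreateAssetMenu] adds an entry under Assets > Create in the editor.
[CreateAssetMenu(fileName = "EnemyStats", menuName = "Examples/Enemy Stats")]
public class EnemyStats : ScriptableObject
{
    public int maxHealth = 100;
    public float moveSpeed = 3.5f;

    // One of the few callbacks a ScriptableObject receives.
    private void OnEnable()
    {
        Debug.Log("EnemyStats enabled: " + name);
    }
}

#if UNITY_EDITOR
// Editor-only helper (hypothetical) that builds the asset from script
// instead of going through the Create menu.
public static class EnemyStatsMenu
{
    [MenuItem("Examples/Create Enemy Stats Asset")]
    private static void Create()
    {
        // Create an in-memory instance, then persist it as an asset file.
        EnemyStats stats = ScriptableObject.CreateInstance<EnemyStats>();
        AssetDatabase.CreateAsset(stats, "Assets/EnemyStats.asset");

        // Store a second instance inside the same asset file as a sub-asset.
        EnemyStats elite = ScriptableObject.CreateInstance<EnemyStats>();
        elite.name = "EliteVariant";
        elite.maxHealth = 250;
        AssetDatabase.AddObjectToAsset(elite, stats);

        AssetDatabase.SaveAssets();
    }
}
#endif
```

The `#if UNITY_EDITOR` guards matter because `AssetDatabase` lives in the `UnityEditor` namespace and is not available in player builds.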

Empty scriptable objects as extensible enums. Derivation from a common base? JSON-serializable by JsonUtility, same as built-ins. Look at LoadAsset<>(“”); FromJsonOverwrite(json, item); Can patch part of an object with this.
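A rough sketch of both ideas, as I understand them. `DamageType`, `WeaponSettings` and `PatchExample` are hypothetical names of my own, and in a real project each ScriptableObject class would likely need its own file named after the class. The only Unity calls used are `JsonUtility.FromJsonOverwrite` and `JsonUtility.ToJson`.

```csharp
using UnityEngine;

// An empty ScriptableObject acting as an "enum value": every asset created
// from this class is one value, so designers can add values without code.
[CreateAssetMenu(menuName = "Examples/Damage Type")]
public class DamageType : ScriptableObject { }

// Hypothetical settings object that refers to one of those values.
[CreateAssetMenu(menuName = "Examples/Weapon Settings")]
public class WeaponSettings : ScriptableObject
{
    public string displayName = "Sword";
    public int damage = 10;
    public DamageType damageType; // compared by reference, like an enum
}

public static class PatchExample
{
    // Overwrites only the fields present in the JSON; fields that are
    // missing from the JSON keep their current values.
    public static void ApplyPatch(WeaponSettings settings)
    {
        string patch = "{\"damage\": 25}";
        JsonUtility.FromJsonOverwrite(patch, settings);

        // Log the full serialized state to inspect the result.
        Debug.Log(JsonUtility.ToJson(settings, true));
    }
}
```

After `ApplyPatch`, `damage` should be 25 while `displayName` stays as it was, which is the "patch part of an object" behaviour the talk described.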

Example of building a robust singleton ScriptableObject that recovers properly on reload. The demo project here looks kind of interesting…on bitbucket.

StartCoroutine() looks interesting.

Interesting session. Getting enough food for thought to make it worth mucking about with some code soon.

Unite ’17 Seoul – ScriptableObjects What they are and why to use them – The next year’s talk on scriptable objects…

AddComponent() for MonoBehaviour. A MonoBehaviour is always attached to a GameObject and Transform along with any other components attached. [Range(min, max)] for a ranged variable. Look at the CreateAssetMenu attribute. Custom property drawer.
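For contrast with the scriptable object side, a small sketch of the MonoBehaviour points above. `Mover` and `MoverFactory` are invented names for illustration; the sketch assumes the script is saved as `Mover.cs`.

```csharp
using UnityEngine;

// Hypothetical component; a MonoBehaviour only exists attached to a GameObject.
public class Mover : MonoBehaviour
{
    // [Range] clamps the value and shows it as a slider in the Inspector.
    [Range(0f, 10f)]
    public float speed = 2f;

    private void Update()
    {
        // The Transform is always available because of the owning GameObject.
        transform.Translate(Vector3.forward * speed * Time.deltaTime);
    }
}

public static class MoverFactory
{
    // Creates a new GameObject and attaches the component with AddComponent.
    public static Mover Create()
    {
        GameObject go = new GameObject("Mover");
        return go.AddComponent<Mover>();
    }
}
```

Note there is no `new Mover()` anywhere: components come from `AddComponent`, where a scriptable object would come from `ScriptableObject.CreateInstance`.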

ScriptableObject.CreateInstance()

AssetDatabase.CreateAsset() or AssetDatabase.AddObjectToAsset()

Diablo: A Classic Game Postmortem – A nice break hearing the lead developer of Diablo talk about the development of the game…

I also watched a session on game pitches that I never expect to have a use for. Playing with games in my spare time appeals, but I can’t currently see any chance that I’d want to try working in the industry.

Interesting blog post What is the purpose of ScriptableObject versus normal class? Talks a bit more about scriptable objects.

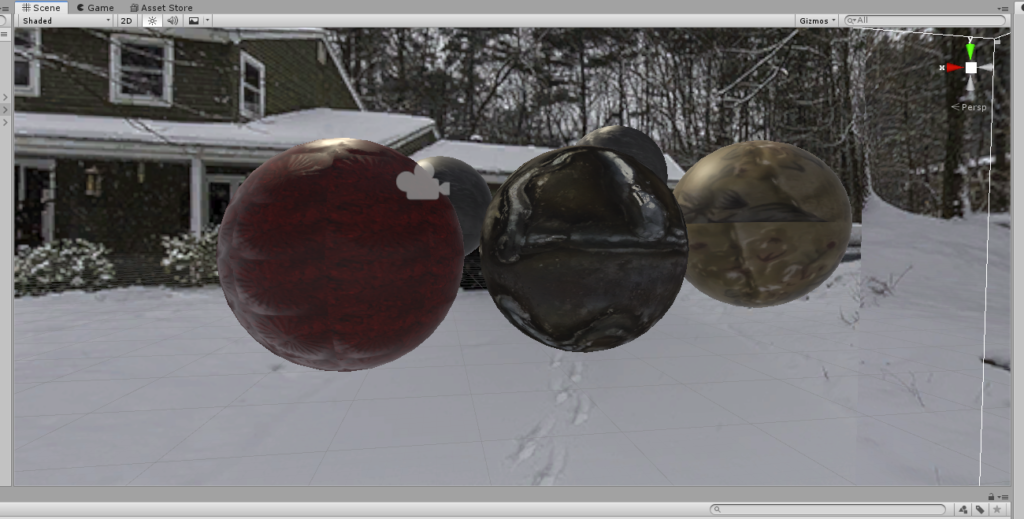

Fast worldbuilding with Unity’s updated Terrain System & ProBuilder – Unite LA – Interesting items here as terrain and such makes for interesting tactical and strategic games. Assets, tiles and patches oh my!

A rather cool set of tools I had not encountered before. Looks like an amazingly quick way to create credible terrain. Got to look at some of the relevant demos as well.

ProBuilder looks like an interesting tool for creating and painting geometry. All free asset store items: ProBuilder, ProGrids, Polybrush. Currently available in the package manager except for the brush. Looks as if this provides a good bit of capability to create and modify geometry at a smaller scale than the terrain tool. ProBuilder-API-Examples looks like the next step to allow this stuff to happen at runtime.

Introducing the new Prefab Workflow – Unite LA – The first in a set of ‘Editor Sessions’ grouped on YouTube. I don’t expect to have time tonight to watch all eight of these, but hopefully I’ll get to them in the near future.

These are the workflows that exist in 2018.3 that we’re currently working with so this is likely to be very relevant.

First item is true prefab nesting support. Variants were mentioned, but I think they’ve been around longer than the nesting support. Prior versions appear to have exploded prefabs within prefabs when Apply was clicked. Variants are essentially derived prefabs…the overridden parameters stay overridden but changes to the base propagate.

What are prefab models? Blue cube with a page symbol. Looks like they’re an early import thing for models coming in from outside. Ah…looks as if variants allow external meshes to be imported in a way that they can still be replaced after the prefab is rigged.

Improved Prefab Workflows: the new way to work with Prefabs – Unite LA –

Material I Don’t Expect to get to Tonight…