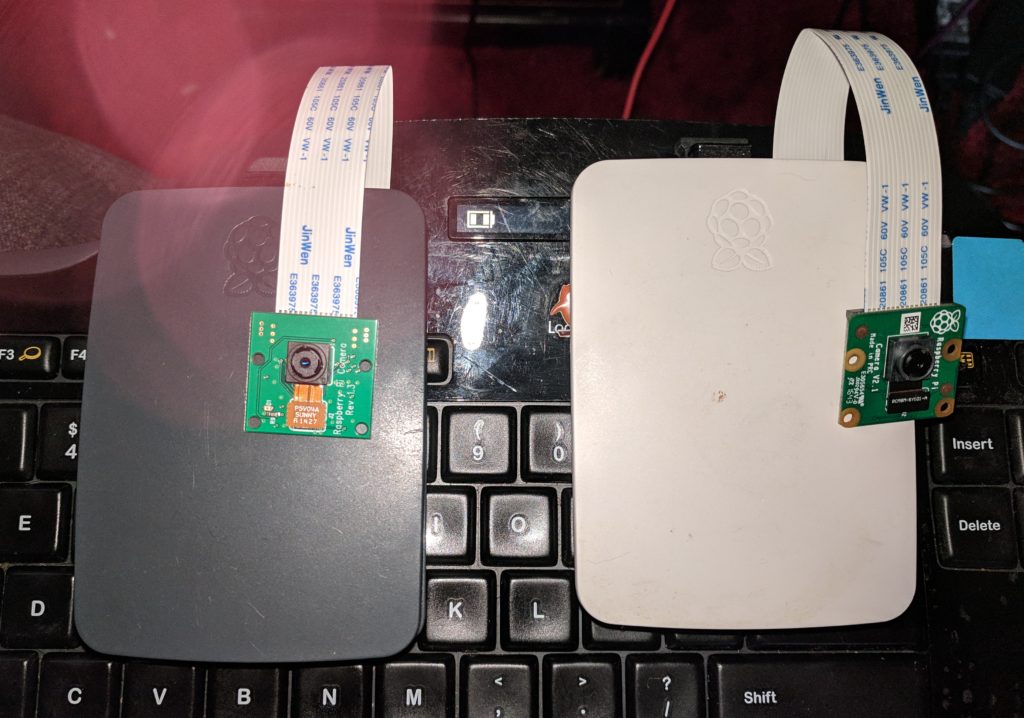

Configuring the PS3 Eye cameras.

Playing with an evaluation version of a motion capture package.

Malcolm and I spent part of Friday experimenting with an evaluation version of a commercial motion capture package. I now have six PS3 Eye cameras and my VR machine has enough USB-3 ports and controllers installed to run them.

Our initial setup had three or four cameras attached and used the room lighting (pretty bright, ceiling-mounted LED lights) for illumination. Initial results weren’t great, but over the rest of the day we learned a few things.

- We need a higher-contrast, less cluttered background. The bookcases behind the area we were using worked better when covered with a piece of white fabric. The tan rug on the floor was less of a problem once the model put on black socks.

- Hands really aren’t handled by the package. No big surprise here as hands and fingers are rather small targets for these cameras.

- More lighting is better. I added two diffused studio lights I had around and a high-intensity, three-light halogen light bar, and the capture became more precise.

- A larger and more diffuse calibration target seemed to work better.

- Sliding the calibration beacon along the floor with periodic stops seemed to work better than touching it down to the floor. This makes all the floor-level reference spots about equal (when I was touching it down, it took some work to make sure we captured the lowest spot in each arc).

- Aligning the reference person with the human figure in the images at the start helped quite a bit. The tool didn’t seem to do a very good job of this without manual help.

By the end of the session, we seemed to be getting a pretty good capture of arms and legs. Feet could still be a bit twitchy.

Malcolm is going to look at 3D printing mounting clips to attach the PS3 Eye cameras to light stands for more stability.

I ordered a couple of spare cameras, so we don’t come up short if any of them fail, and a couple of PCIe USB-3 cards to add more USB controllers.

Overall, things turned out pretty well, and I think we learned a bit more about making motion capture work decently without dedicated beacons. The package is expensive enough that even a short-term license would only make sense if we had a substantial amount of motion capture work to get done.